Introduction

Software testing is no longer what it used to be. The rise of Generative AI has dramatically reshaped how testers approach quality assurance. From automating repetitive tasks to intelligently generating test data and scenarios, AI is now an integral part of the modern testing landscape.

What Is Generative AI?

At its core, Generative AI refers to algorithms that can create new content—be it text, images, or even code—based on patterns learned from existing data. This innovation is powered by models like GPT (Generative Pre-trained Transformer) and Diffusion Models, which excel at understanding and generating human-like responses or outputs.

When applied to software testing, Generative AI tools can:

- Write automated test cases

- Predict potential bugs

- Simulate real-world usage scenarios

- Analyze user feedback for hidden issues

- Create synthetic test data

“Generative AI gives testers a second brain—one that doesn’t sleep, miss bugs, or forget edge cases.”

Let’s look at how traditional QA transforms when integrated with AI-driven capabilities:

| Traditional Testing | With Generative AI |
| --- | --- |
| Manual test case writing | Automated test case generation |
| Static test data | Synthetic, dynamic test data |
| Reactive bug finding | Predictive bug detection |
| Repetitive testing | Smart, context-aware testing |
| Linear testing cycles | Adaptive and evolving test strategies |

In 2024, more than 40% of QA teams globally have adopted some form of AI-powered testing—a number expected to cross 70% by 2026, according to Gartner.

Why Should Testers Care?

As companies push for faster releases, zero-bug tolerance, and cost efficiency, testers who understand Generative AI will hold a competitive edge. They won’t just execute tests—they’ll guide AI to test smarter, deeper, and faster.

This guide is designed to help you, whether you’re a manual tester, automation engineer, or QA analyst, build a clear roadmap to mastering Generative AI in software testing.

What Is the Difference Between AI and ML in Testing?

AI (Artificial Intelligence) is the broader concept of machines simulating human thinking. ML (Machine Learning), on the other hand, is a subset of AI that learns patterns from data without being explicitly programmed.

For testers, this means:

- AI can drive bots to simulate user actions.

- ML helps predict failures or suggest the most probable test paths based on historical data.

Think of AI as the umbrella, while ML is one of its strongest ribs.
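To make the "historical data" idea concrete, here is a minimal sketch that ranks tests by how often they have failed before. It uses a plain failure-rate count rather than a trained ML model, and the `(test_name, passed)` record shape and the function name `prioritize_tests` are assumptions made up for this illustration.

```python
from collections import defaultdict

def prioritize_tests(history):
    """Order test names by historical failure rate, highest first.

    `history` is a list of (test_name, passed) tuples; this record shape
    is a hypothetical one chosen for the example.
    """
    runs = defaultdict(int)
    failures = defaultdict(int)
    for name, passed in history:
        runs[name] += 1
        if not passed:
            failures[name] += 1
    # Sort by failure rate (descending), then by name for a stable order.
    return sorted(runs, key=lambda n: (-failures[n] / runs[n], n))

history = [
    ("test_login", True), ("test_login", False),
    ("test_checkout", False), ("test_checkout", False),
    ("test_search", True), ("test_search", True),
]
ranked = prioritize_tests(history)
print(ranked)  # ['test_checkout', 'test_login', 'test_search']
```

An ML-based tool would likely use richer signals (code changes, ownership, flakiness), but the goal is the same: run the riskiest tests first.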

Why Do Testers Need to Understand Machine Learning?

Understanding machine learning is essential because:

- It helps testers interpret AI-driven insights.

- It lets you evaluate model performance, for example by tracking false positives and false negatives.

- You can train or fine-tune models for test case prioritization, defect prediction, or risk-based testing.
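As a small illustration of evaluating model performance, the sketch below computes precision, recall, and F1-score for a defect-prediction model from its counts of true positives, false positives, and false negatives. The counts are invented for the example and the function name is an assumption.

```python
def classification_metrics(tp, fp, fn):
    """Return (precision, recall, f1) from confusion-matrix counts."""
    # Precision: of everything flagged as a defect, how much really was one?
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    # Recall: of all real defects, how many did the model flag?
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1: harmonic mean of precision and recall.
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1

# Hypothetical counts: 40 correct flags, 10 false alarms, 20 missed defects.
precision, recall, f1 = classification_metrics(tp=40, fp=10, fn=20)
print(f"precision={precision:.2f} recall={recall:.2f} f1={f1:.2f}")
# precision=0.80 recall=0.67 f1=0.73
```

A model with many false positives wastes triage time, while one with many false negatives lets defects through, so both numbers are worth watching.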

What Are the Common ML Algorithms Useful in Testing?

| Algorithm | Use Case in Testing |
| --- | --- |
| Decision Trees | Predicting test case failure or defect probability |
| Random Forest | Classifying bug severity based on past bug reports |
| K-Means Clustering | Grouping similar test cases or user sessions for analysis |
| Naive Bayes | Spam/ham classification for user feedback |
| Neural Networks | Advanced prediction for performance bottlenecks |
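To show what one of these algorithms looks like in practice, here is a rough, standard-library-only sketch of a Naive Bayes classifier for user feedback. The training sentences and the labels `bug` and `praise` are invented for illustration; a real project would more likely use a library such as scikit-learn and far more data.

```python
import math
from collections import Counter

def train(samples):
    """Count words per label from a list of (text, label) pairs."""
    word_counts = {}          # label -> Counter of words seen under that label
    label_counts = Counter()  # label -> number of training samples
    vocab = set()
    for text, label in samples:
        words = text.lower().split()
        label_counts[label] += 1
        word_counts.setdefault(label, Counter()).update(words)
        vocab.update(words)
    return word_counts, label_counts, vocab

def classify(text, model):
    """Return the label with the highest log-probability for `text`."""
    word_counts, label_counts, vocab = model
    total = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, count in label_counts.items():
        score = math.log(count / total)  # prior probability of the label
        denom = sum(word_counts[label].values()) + len(vocab)
        for word in text.lower().split():
            # Add-one (Laplace) smoothing so unseen words do not zero out the score.
            score += math.log((word_counts[label][word] + 1) / denom)
        if score > best_score:
            best_label, best_score = label, score
    return best_label

# Tiny, made-up training set.
model = train([
    ("app crashes on login", "bug"),
    ("checkout button does nothing", "bug"),
    ("love the new design", "praise"),
    ("great update works well", "praise"),
])
print(classify("login crashes again", model))  # bug
print(classify("great design", model))         # praise
```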

Do Testers Need to Learn Programming to Use AI Tools?

Not necessarily. Many modern AI-powered testing tools come with no-code or low-code interfaces. However, basic scripting knowledge (like Python or JavaScript) helps in customization and advanced integrations.

How Does Generative AI Improve the Testing Lifecycle?

Generative AI is a game-changer for testers. It’s not just about saving time—it’s about transforming how testing is done across every phase of the software development lifecycle (SDLC).

Let’s break it down by lifecycle stages:

| Testing Phase | Traditional Approach | With Generative AI |
| --- | --- | --- |
| Requirement Analysis | Manual reading & interpretation | AI auto-summarizes & extracts testable points from documents |
| Test Planning | Based on guesswork or past experience | Predictive planning based on historical data & trends |
| Test Case Design | Manually written cases | Auto-generation of test cases using app behavior or user flows |
| Test Data Creation | Static, reused data sets | AI generates dynamic, edge-case-rich test data |
| Test Execution | Scripted automation or manual execution | Self-evolving test scripts, adaptive based on changes |
| Bug Reporting | Manual logging | AI summarizes logs, identifies root causes, suggests fixes |
| Maintenance | Breaks with every update | AI auto-heals broken scripts with context awareness |

What Are the Top Benefits of Generative AI for Testers?

Here are the core advantages testers gain from using Generative AI:

- Speed: AI can draft a large batch of test cases in the time it takes a human to write one, though each draft still needs human review.

- Accuracy: Reduces human errors, especially in repetitive or data-heavy tests.

- Adaptability: AI evolves as the code changes, making it ideal for agile environments.

- Coverage: It uncovers hidden edge cases and corner scenarios humans often miss.

- Insights: AI learns from past defects and predicts new ones before they happen.

How Can AI Help with Compliance and Security Testing?

Generative AI can simulate malicious inputs or create edge-case payloads that test an app’s resilience. For testers working in fintech, healthcare, or banking, this is gold.

Key highlights:

- Synthetic PII generation to test data privacy logic

- Security fuzzing inputs to expose injection vulnerabilities

- Policy compliance mapping for GDPR, HIPAA, and other standards
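The first two highlights can be sketched without any AI tool at all, which helps clarify what such a tool would be automating. The example below builds fake user records and a short list of hostile inputs using only the standard library. The names, the field layout, and the payload list are assumptions chosen for illustration, not output from any specific product.

```python
import random
import string

def synthetic_user(rng):
    """Build one fake user record; no real personal data is involved."""
    first = rng.choice(["Asha", "Liam", "Mei", "Omar"])
    last = rng.choice(["Khan", "Silva", "Novak", "Tanaka"])
    digits = "".join(rng.choice(string.digits) for _ in range(7))
    return {
        "name": f"{first} {last}",
        "email": f"{first}.{last}@example.com".lower(),
        "phone": f"555-{digits[:3]}-{digits[3:]}",
    }

def fuzz_inputs():
    """Return a few classic hostile or edge-case strings for input fields."""
    return [
        "",                           # empty input
        " " * 10,                     # whitespace only
        "A" * 10_000,                 # oversized input
        "' OR '1'='1",                # SQL injection probe
        "<script>alert(1)</script>",  # script injection probe
        "../../etc/passwd",           # path traversal probe
        "\u0000",                     # null character
    ]

rng = random.Random(42)  # fixed seed so test runs are repeatable
users = [synthetic_user(rng) for _ in range(3)]
for user in users:
    print(user)
print(len(fuzz_inputs()), "fuzz inputs ready")
```

A generative model could extend this by producing domain-specific payloads or more realistic records, but the generated data should still be checked against your privacy rules before use.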

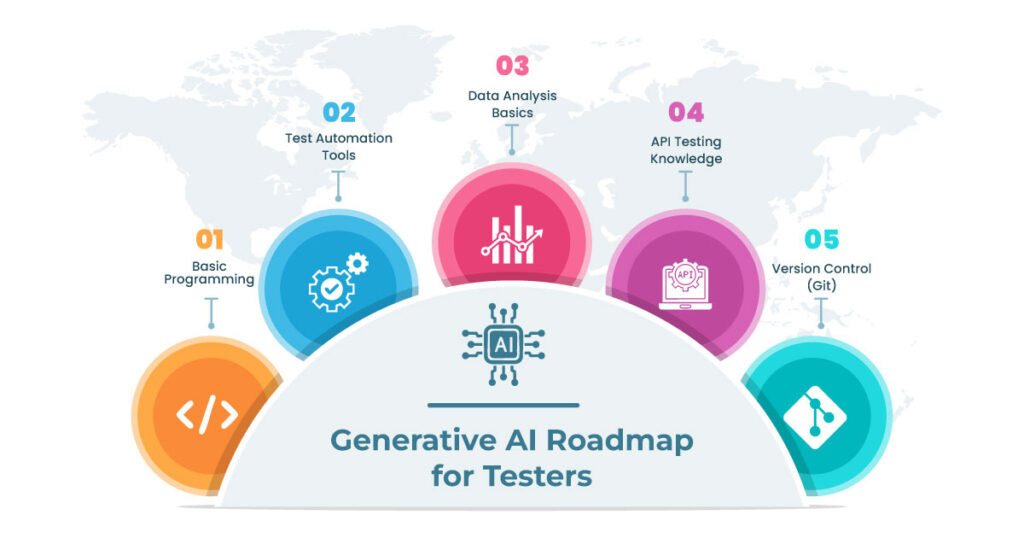

What Skills Do Testers Need to Succeed in an AI-Driven Testing World?

You don’t need to be an AI engineer to work with Generative AI. But if you want to stay relevant, you’ll need to pick up a few essential AI-friendly testing skills.

Let’s break them into categories:

Core Technical Skills for Testers

| Skill | Why It Matters |
| --- | --- |
| Basic Programming | Needed to integrate or customize AI tools (e.g., Python, JavaScript, Java) |
| Test Automation Tools | Tools like Selenium, Playwright, or Cypress integrate well with AI frameworks |
| Data Analysis Basics | Helps you interpret model outputs and debug ML predictions |
| API Testing Knowledge | Many AI systems interact via APIs, so knowing how to test them is crucial |
| Version Control (Git) | You’ll collaborate more with devs and data teams, and Git is a must for syncing work |

AI & ML Skills to Future-Proof Your Career

- Understand ML Terminology: Terms like overfitting, precision, recall, F1-score

- Model Behavior Testing: Know how to test a model’s decisions, not just UI

- Prompt Engineering: Crafting clear, test-relevant prompts to tools like ChatGPT

- Bias Testing: Learn how to identify unfair or inconsistent AI behaviors
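Prompt engineering is easier to practice when the prompt is built from a reusable template. The sketch below assembles a test-case generation prompt from a feature name and its acceptance criteria. The wording of the prompt, the function name, and the requested JSON keys (`title`, `steps`, `expected`) are all assumptions for illustration; adjust them to whatever your tool and team expect.

```python
def build_test_case_prompt(feature, acceptance_criteria, n_cases=5):
    """Return a prompt asking an AI assistant for structured test cases."""
    criteria = "\n".join(f"- {c}" for c in acceptance_criteria)
    return (
        "You are a QA engineer.\n"
        f"Feature under test: {feature}\n"
        f"Acceptance criteria:\n{criteria}\n"
        f"Write {n_cases} test cases, including at least one negative "
        "and one boundary case.\n"
        "Return a JSON list where each item has the keys: "
        "title, steps, expected."
    )

prompt = build_test_case_prompt(
    "Password reset",
    ["Reset link expires after 30 minutes", "New password must differ from the old one"],
)
print(prompt)
```

Stating the role, the criteria, the number of cases, and the output format explicitly tends to give more consistent results than a one-line request.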

Soft Skills That Matter Even More in AI Context

- Critical Thinking: Can you question AI suggestions or identify false positives?

- Curiosity: Are you eager to try tools, fail fast, and learn iteratively?

- Collaboration: Working with AI/ML teams, devs, and product managers is now part of the testing role

- Adaptability: With AI evolving fast, your ability to unlearn and relearn is gold

Can Testers Upskill Without a Data Science Background?

Yes—absolutely. In fact, here’s a simple roadmap:

- Start with AI basics on YouTube or Coursera (free courses)

- Practice prompt engineering with tools like ChatGPT or Testim.io

- Use AI-enhanced tools (Testim, Mabl, Katalon) in small projects

- Try low-code AI model builders like Teachable Machine or IBM Watson

- Explore open datasets to understand how AI models learn

Recommended Tools for Skill-Building

| Tool/Platform | Purpose |
| --- | --- |
| ChatGPT | Prompt testing, test case generation |
| Testim.io | AI-based UI testing automation |
| Applitools | AI-driven visual validation testing |
| Google Colab | Practice ML using real Python notebooks |
| Kaggle | Play with datasets, learn ML through challenges |

Checklist: Do You Have the AI Testing Skill Set?

✅ Can you write clear prompts for tools like ChatGPT?

✅ Do you understand the difference between classification and regression models?

✅ Are you comfortable validating the AI-generated test output?

✅ Can you read and respond to data logs from AI testing tools?

✅ Are you continuously learning through courses, communities, or challenges?
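Validating AI-generated test output can start with a simple structural check before any human review. Here is a minimal sketch that assumes the AI tool was asked to return test cases as a JSON list with the keys `title`, `steps`, and `expected`. That format, the sample response, and the function name are assumptions for this example.

```python
import json

REQUIRED_KEYS = {"title", "steps", "expected"}

def validate_generated_cases(raw_json):
    """Return (valid_cases, problems) for a JSON list of test cases."""
    try:
        cases = json.loads(raw_json)
    except json.JSONDecodeError as exc:
        return [], [f"not valid JSON: {exc.msg}"]
    if not isinstance(cases, list):
        return [], ["top-level value is not a list"]
    valid, problems = [], []
    for i, case in enumerate(cases):
        if not isinstance(case, dict):
            problems.append(f"case {i}: not an object")
            continue
        missing = REQUIRED_KEYS - set(case)
        if missing:
            problems.append(f"case {i}: missing {sorted(missing)}")
        elif not case["steps"]:
            problems.append(f"case {i}: no steps")
        else:
            valid.append(case)
    return valid, problems

# Hypothetical AI response: the second case lacks an expected result.
raw = """[
  {"title": "Valid login", "steps": ["open page", "submit form"],
   "expected": "dashboard shown"},
  {"title": "Missing expectation", "steps": ["open page"]}
]"""
valid, problems = validate_generated_cases(raw)
print(len(valid), problems)  # 1 ["case 1: missing ['expected']"]
```

A check like this only confirms the shape of the output. Whether each test case is actually meaningful still needs a tester's judgment.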

AI for Bug Prediction & Code Review

| Tool | Use Case |
| --- | --- |
| Codacy | AI code quality analysis |
| DeepCode (Snyk) | ML-based static code analysis |
| SonarQube | Analyzes code and flags bugs + vulnerabilities |

These tools are great allies during unit testing and code integration stages. Testers can use them to predict weak spots or confirm developer changes.

Synthetic Test Data Generators (AI-Powered)

- Tonic.ai: Generates production-like synthetic data for testing without compromising privacy

- Mockaroo: Quickly generates structured test data in multiple formats

- Datomate: Custom AI-based test data engine—good for large enterprise projects

Collaboration & Prompt Engineering Tools

- Notion AI: Ideal for documenting test strategies, summarizing defect reports

- Jasper AI: Generates test documentation, release notes, or QA reports

- PromptLoop: Integrates AI with spreadsheets to generate or analyze test cases

How to Choose the Right AI Tool for Testing?

| Need | Recommended Tool |
| --- | --- |
| Want to write and maintain UI tests | Testim.io, Mabl |
| Need bug predictions or code analysis | DeepCode, Codacy |
| Require synthetic data at scale | Tonic.ai, Mockaroo |
| Looking for visual layout checks | Applitools |
| Want to document with AI assistance | Jasper, Notion AI |

Can These Tools Integrate with CI/CD Pipelines?

Yes, most modern AI testing tools support integration with Jenkins, GitHub Actions, GitLab CI, and more. This means your AI-generated tests can run automatically during deployment, ensuring quality gates are in place without slowing you down.
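A quality gate is usually just a script whose exit code tells the pipeline whether to continue. The sketch below shows one possible shape for such a gate. The result format (a list of dictionaries with a `status` field) and the function name are assumptions, since each tool reports results differently.

```python
def quality_gate(results, max_failure_rate=0.0):
    """Return a process exit code: 0 if the gate passes, 1 otherwise.

    `results` is a list of dicts with a "status" key; this shape is a
    hypothetical one, not the report format of any particular tool.
    """
    total = len(results)
    if total == 0:
        return 1  # treat an empty run as a failure rather than a silent pass
    failed = sum(1 for r in results if r["status"] != "passed")
    return 0 if failed / total <= max_failure_rate else 1

results = [
    {"name": "test_login", "status": "passed"},
    {"name": "test_checkout", "status": "failed"},
]
print(quality_gate(results))                        # 1
print(quality_gate(results, max_failure_rate=0.5))  # 0
```

In a pipeline step, the return value would typically be passed to `sys.exit` so that a failed gate stops the deployment.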

Building a Generative AI Roadmap for Testers: Step-by-Step Guide

How Can Testers Get Started with Generative AI?

Here’s a clear, 5-step roadmap for testers to confidently integrate Generative AI into their work:

Step 1: Learn the Basics of AI in Testing

- Read up on AI/ML fundamentals.

- Explore how AI is applied in real test scenarios.

- Follow QA communities sharing AI use cases.

Tool tip: Try ChatGPT to write sample test cases and analyze logs.

Step 2: Identify Repetitive Tasks You Can Automate

Focus on what’s eating your time. Start with:

- Test case generation

- Test data creation

- Bug triaging

Think: “What tasks could an intelligent assistant do better or faster?”
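Bug triaging is a good first candidate because part of it is mechanical, such as spotting likely duplicates. As a rough sketch, the standard library's `difflib.SequenceMatcher` can compare a new bug title against existing ones. The titles and the 0.8 threshold are made-up values for illustration, and an AI-based tool would likely compare meaning rather than characters.

```python
from difflib import SequenceMatcher

def likely_duplicates(new_title, existing_titles, threshold=0.8):
    """Return (title, similarity) pairs at or above `threshold`, best first."""
    matches = []
    for title in existing_titles:
        # ratio() is a character-level similarity score between 0 and 1.
        ratio = SequenceMatcher(None, new_title.lower(), title.lower()).ratio()
        if ratio >= threshold:
            matches.append((title, round(ratio, 2)))
    return sorted(matches, key=lambda m: -m[1])

existing = [
    "Login button unresponsive on mobile Safari",
    "Checkout total shows wrong currency",
]
matches = likely_duplicates("Login button unresponsive on mobile", existing)
print(matches)
```

Flagged pairs are suggestions only. A tester should still confirm that two reports describe the same defect before closing one.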

Step 3: Choose 1 AI Tool to Experiment With

Don’t get overwhelmed by choices. Pick one that fits your context:

- For UI testing: Testim or Mabl

- For visual bugs: Applitools

- For prompt-driven automation: ChatGPT + Python

Start small. One feature. One module. One sprint.

Step 4: Upskill Consistently

Set aside 30–60 minutes each week to:

- Try hands-on tutorials

- Take short AI testing courses (Test Automation University, Coursera)

- Join webinars from AI-focused testing platforms

Learning in small bursts keeps you ahead.

Step 5: Collaborate & Document Outcomes

Involve devs, product owners, and data teams. Let AI enhance QA conversations, not replace them.

- Document test coverage improvements

- Highlight AI-detected bugs missed manually

- Share lessons and refine workflows

Conclusion: The Future Belongs to Testers Who Adapt

The future of QA is not just about finding bugs—it’s about being a quality partner empowered by AI. Generative AI tools won’t replace testers. They’ll replace testers who don’t use AI.

So if you’re a tester today, tomorrow’s challenge isn’t learning 10 new tools—it’s learning how to think with AI, test smarter, and lead innovation from the QA front.

Start your journey now. The roadmap is here. The rest is execution.

TestLeaf’s Generative AI for QA Engineers Training is the fastest way to upskill.

The course blends real-world projects, practical tools, and expert coaching—so you can move from learning to applying in weeks, not months.

Future-ready testers don’t wait. They build.

Start your journey now—because the smartest testers in the room will be the ones working with AI, not against it.

FAQs

Is AI Going to Replace Testers?

No, it won’t. AI is here to augment, not replace. Think of it as a smart assistant. Testers still need to:

- Define quality standards

- Validate model suggestions

- Bring human intuition into the loop

What Tools Should Testers Explore to Understand AI?

- Google Colab – for ML hands-on projects

- Teachable Machine by Google – to create simple models visually

- Kaggle – to explore datasets and ML use cases

- ChatGPT + Python – to generate test cases and debug scripts

Author’s Bio:

As CEO of TestLeaf, I’m dedicated to transforming software testing by empowering individuals with real-world skills and advanced technology. With 24+ years in software engineering, I lead our mission to shape local talent into global software professionals. Join us in redefining the future of test engineering and making a lasting impact in the tech world.

Babu Manickam

CEO – TestLeaf