I wondered why so many test automation scripts fail intermittently, day after day. After analyzing more than 20,000 tests, I found that the main cause is neither the tools, nor the scripting techniques, nor the build. So what causes most of the failures?

The biggest pain today is the wrong selection of tests to automate.

More importantly, manual testers work tirelessly to test the product or project against builds that now arrive almost daily. I have seen my testing team lose interest in testing creatively over time because of repetitive (boring) manual tasks. With test automation in place, testers can be ready to test the inevitable changes deployed in each new build. Agree?

Where to start?

Start by automating the tests that are run manually against every build. Do you mean smoke tests first? Yes, the build verification tests. Let’s see how it makes a difference.

Assume a test case has about 20 steps with a few verifications. Say a single manual run takes 5 minutes, while automating the same test (using Selenium WebDriver) for a single browser (say, Chrome) may take 120 to 180 minutes or more, including development, testing, integration, etc.

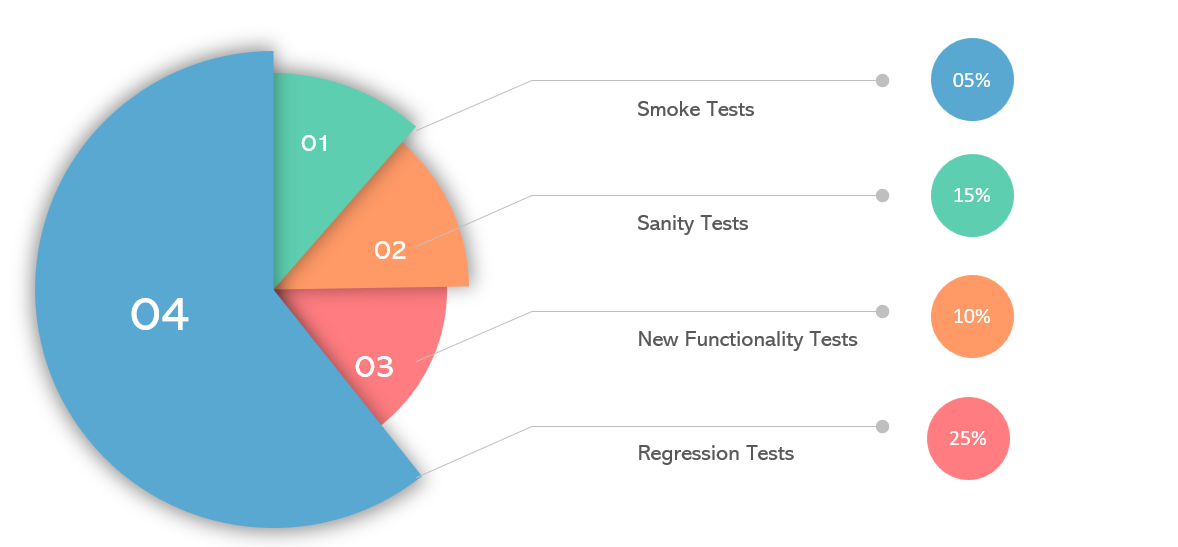

That means you need to run the automated test at least 24 times (120 minutes divided by 5 minutes) just to break even, and you have to hope the automated test does not break before you get there. Okay? Per industry data, smoke tests make up about 5% of the overall test suite, which sounds like minimal effort. Correct, and it is the beginning of automation success.
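The break-even arithmetic behind these figures (5 minutes per manual run, 120 to 180 minutes to automate) can be sketched as follows. This is a minimal illustration, not a costing model: the function name `break_even_runs` is hypothetical, and it assumes each automated run replaces one full manual run and that maintenance and execution time are free.

```python
import math


def break_even_runs(manual_minutes: float, automation_minutes: float) -> int:
    """Return how many automated runs are needed to recover the build cost.

    Assumption: every automated run saves exactly one manual run, and the
    automated test needs no maintenance in the meantime.
    """
    if manual_minutes <= 0:
        raise ValueError("manual_minutes must be positive")
    return math.ceil(automation_minutes / manual_minutes)


# Figures from the text: 5 minutes manual, 120 to 180 minutes to automate.
low = break_even_runs(5, 120)
high = break_even_runs(5, 180)
print(f"Break-even after {low} to {high} runs")
```

Any maintenance on the script pushes the break-even point further out, which is why tests that run against every build are the safest place to start.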

Can developers use my automated smoke tests?

If the smoke tests run successfully every day in the test environments, they can also be promoted for use by developers.

Why not? Shift Left.

What next?

Then move on to sanity tests: the business-critical tests that need to be automated so that business cases do not fail in the production environment. It may also be a good idea to run them with multiple sets of test data. Per industry data, sanity tests make up roughly 15% of the overall test suite, which sounds like a decent effort. With smoke and sanity together, you reach the Pareto rule (approximately 80% of the effects come from 20% of the causes).
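To make the 5% and 15% shares concrete, here is a small sizing sketch. The function name `plan_suite` and the suite size of 1,000 tests are assumptions made purely for illustration; only the two percentages come from the text.

```python
def plan_suite(total_tests: int, smoke_percent: int = 5, sanity_percent: int = 15) -> dict:
    """Split a test suite into smoke, sanity, and remaining tests.

    The default percentages are the rough shares quoted in the text;
    real suites will differ.
    """
    smoke = round(total_tests * smoke_percent / 100)
    sanity = round(total_tests * sanity_percent / 100)
    return {
        "smoke": smoke,
        "sanity": sanity,
        "automate_first": smoke + sanity,
        "remaining": total_tests - smoke - sanity,
    }


# Hypothetical suite of 1,000 test cases.
print(plan_suite(1000))
```

For the hypothetical 1,000-test suite, this gives 50 smoke tests and 150 sanity tests, so the first 200 automated tests are the 20% referred to by the Pareto rule.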

Make sense?

The sanity tests are developed. What then?

You may be tempted to move on. But stay there for a while: run the sanity tests against different browsers, platforms, and data sets to confirm that the automation is stable.
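One way to picture this stabilization step is as a run matrix over browsers, platforms, and data sets. The sketch below is an assumption-laden illustration: `run_matrix`, `failing_combinations`, and `fake_sanity_test` are hypothetical names, and the stand-in runner does not drive a real browser. In a real suite, the runner would launch the Selenium test for the given combination and return whether it passed.

```python
from itertools import product
from typing import Callable, Dict, Iterable, List, Tuple

Combo = Tuple[str, str, str]


def run_matrix(
    run_test: Callable[[str, str, str], bool],
    browsers: Iterable[str],
    platforms: Iterable[str],
    datasets: Iterable[str],
) -> Dict[Combo, bool]:
    """Run one test against every browser/platform/data-set combination."""
    return {
        combo: run_test(*combo)
        for combo in product(browsers, platforms, datasets)
    }


def failing_combinations(results: Dict[Combo, bool]) -> List[Combo]:
    """Return the combinations where the test did not pass."""
    return [combo for combo, passed in results.items() if not passed]


def fake_sanity_test(browser: str, platform: str, dataset: str) -> bool:
    """Stand-in runner (hypothetical): pretends one combination is unstable."""
    return not (browser == "firefox" and dataset == "large_order")


results = run_matrix(
    fake_sanity_test,
    browsers=["chrome", "firefox"],
    platforms=["windows", "linux"],
    datasets=["small_order", "large_order"],
)
print(f"{len(results)} combinations run")
print("Unstable:", failing_combinations(results))
```

Moving on to the regression suite only once the list of failing combinations stays empty over several builds is one reasonable reading of "confirm the automation stability".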

Great idea?

If all goes well, then what?

If all goes well, your automation is now working well for you. Time to step up! Go for a minimal regression suite, and perhaps new functionality if you are part of in-sprint automation.

What and how much to automate?

In sum, start by automating the minimum, the smoke and sanity tests, and then grow your automation suite consistently. Remember, a tree does not bear fruit overnight, and success does not come in a day either. So start small and stay consistent with your test automation.

Would you like to know more about software testing courses? Learn from Testleaf.