Q.1. How do you rerun only failed scripts in your framework?

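Explanation: In my automation framework, rerunning only the failed scripts relies on a test rerun strategy: only the tests that failed in the initial run are executed again, instead of the whole suite. With TestNG this can be done in two ways. An IRetryAnalyzer retries a failed test immediately within the same run. Alternatively, after each run TestNG writes a testng-failed.xml file (to test-output by default, or to target/surefire-reports when run through Maven Surefire) that lists only the failed tests and can be executed as a suite on its own.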

Use Case: In our project, we had a suite of end-to-end tests. Using TestNG, I added a retry mechanism specifically for failed test cases. For instance, if a test failed because of a transient issue, the framework would automatically rerun that specific test, which made the results more reliable.

import org.testng.IRetryAnalyzer;

import org.testng.ITestResult;

public class RetryAnalyzer implements IRetryAnalyzer {

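// Retries already performed for this test method

private int retryCount = 0;

// Upper bound on retries: a failing test runs at most 1 + 3 times

private static final int MAX_RETRY_COUNT = 3;

@Override

public boolean retry(ITestResult result) {

if (retryCount < MAX_RETRY_COUNT) {

retryCount++;

return true; // ask TestNG to run the failed test again

}

return false; // retry budget used up: report the failure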

}

}

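This snippet uses TestNG’s IRetryAnalyzer to allow a maximum of three retries for a failed test method. It only takes effect once it is attached to a test, either per method with @Test(retryAnalyzer = RetryAnalyzer.class) or for every test through an IAnnotationTransformer listener registered in testng.xml.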
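To make the retry budget concrete: with a limit of three, a test that keeps failing is executed up to four times in total (the original run plus three retries). The sketch below simulates the same counting logic in plain Java, with no TestNG dependency; the class name RetrySimulation and the use of a BooleanSupplier to stand in for a test method are assumptions made for illustration.

```java
import java.util.function.BooleanSupplier;

/**
 * Plain-Java simulation of the RetryAnalyzer counting logic.
 * RetrySimulation is a hypothetical helper, not part of TestNG.
 */
final class RetrySimulation {

    private RetrySimulation() {
    }

    /**
     * Runs the "test" once, then retries it while it keeps failing and the
     * retry budget is not exhausted. Returns the total number of executions.
     */
    static int executionsUntilPassOrGiveUp(BooleanSupplier test, int maxRetryCount) {
        int retryCount = 0;
        int executions = 1;
        boolean passed = test.getAsBoolean(); // initial run
        // Same condition as retry(): try again only while under the limit
        while (!passed && retryCount < maxRetryCount) {
            retryCount++;
            executions++;
            passed = test.getAsBoolean();
        }
        return executions;
    }
}
```

With a limit of 3, an always-failing test yields 4 executions, while a test that fails once and then passes yields 2. This is why a generous retry limit can noticeably lengthen a run that contains genuinely broken tests.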

Exception: One potential exception is a NullPointerException if the retry logic is not properly initialized or there is an issue with the test context. To handle this, I would ensure that variables are properly initialized and validate the test context before attempting retries.

Challenges: One challenge was determining the optimal number of retries. I addressed this by collaborating with the team to strike a balance between absorbing transient failures and avoiding endless reruns that slow down the suite and mask genuine defects. Additionally, managing dependencies between tests during reruns required careful synchronization, which I achieved through explicit wait strategies to ensure a stable test environment.

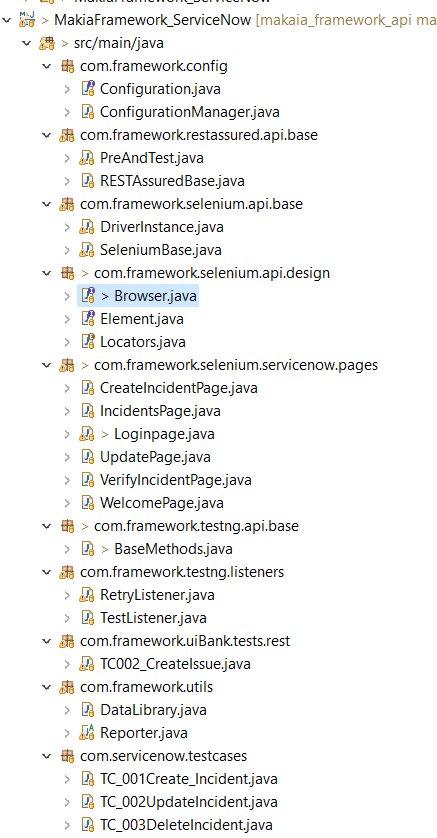

Q.2. Explain the structure of your automation framework.

Explanation: The automation framework’s structure is the foundation that dictates how test scripts, configurations, and supporting elements are organized and executed. It encompasses the directory hierarchy, design patterns, and modular components that collectively form the framework.

Use Case: The LeafBot Hybrid Framework is built on the Page Object Model (POM) and combines several key components for efficient, scalable test automation: Selenium WebDriver for interacting with the web application, TestNG as the test execution engine, Apache POI for data-driven testing with data read from Excel, Cucumber for Behavior-Driven Development (BDD) automation, and Extent Reports for a comprehensive HTML summary report.

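In a simplified view, the framework is organized in layers: page classes (one per screen, following POM) wrap the Selenium WebDriver interactions; TestNG test classes call those page methods; an Apache POI utility reads Excel sheets and supplies the rows as test data; Cucumber feature files and their Java step definitions express BDD scenarios; and Extent Reports collects the results into the HTML summary.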

Challenges: One challenge was maintaining a balance between modularity and simplicity. I addressed this by regularly reviewing the framework’s structure, ensuring that it remained scalable while avoiding unnecessary complexity. Additionally, coordinating dependencies between different modules required clear documentation and communication within the team, allowing for seamless collaboration and updates to the framework.
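For the data-driven layer, a TestNG @DataProvider returns an Object[][] in which each inner array holds the arguments for one test invocation. The sketch below shows that conversion in plain Java, assuming a header row followed by data rows. It parses simple comma-separated text as a stand-in for the sheet that Apache POI would read; the names SheetData and toDataProviderRows are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper that shapes tabular test data for a TestNG @DataProvider. */
final class SheetData {

    private SheetData() {
    }

    /**
     * Skips the header row and returns one Object[] (one test invocation) per data row.
     * Comma splitting stands in for reading cells with Apache POI; quoted values are not handled.
     */
    static Object[][] toDataProviderRows(String csv) {
        String[] lines = csv.trim().split("\\R");
        List<Object[]> rows = new ArrayList<>();
        for (int i = 1; i < lines.length; i++) { // line 0 is the header row
            if (lines[i].trim().isEmpty()) {
                continue; // skip blank lines, like empty rows in a sheet
            }
            String[] cells = lines[i].split(",", -1);
            Object[] row = new Object[cells.length];
            for (int c = 0; c < cells.length; c++) {
                row[c] = cells[c].trim();
            }
            rows.add(row);
        }
        return rows.toArray(new Object[0][]);
    }
}
```

A method annotated with @DataProvider could return the result of toDataProviderRows, and TestNG would then call the test once per row, for example with (username, password). Keeping the parsing in one helper means the test classes never depend on the file format.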

Q.3. What is a POM.xml file, and what is its purpose?

Explanation: The POM.xml (Project Object Model) file is a crucial configuration file in Maven-based projects. It serves as a central management tool, defining the project’s structure, dependencies, plugins, and build settings.

Use Case: In our project, the pom.xml file manages dependencies and plugins. It declares the Selenium and TestNG dependencies, ensuring that the same versions are used across all test classes, and it configures the Maven plugins for test execution and reporting.

Code Snippet: Dependencies in the pom.xml file:

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>com.example</groupId>

<artifactId>your-automation-project</artifactId>

<version>1.0-SNAPSHOT</version>

<properties>

<maven.compiler.source>1.8</maven.compiler.source>

<maven.compiler.target>1.8</maven.compiler.target>

</properties>

<dependencies>

<!-- Selenium dependency -->

<dependency>

<groupId>org.seleniumhq.selenium</groupId>

<artifactId>selenium-java</artifactId>

<version>3.141.59</version>

</dependency>

<!-- TestNG dependency -->

<dependency>

<groupId>org.testng</groupId>

<artifactId>testng</artifactId>

<version>7.4.0</version>

</dependency>

<!-- Other dependencies... -->

</dependencies>

<build>

<plugins>

<!-- Maven Surefire Plugin for test execution -->

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-surefire-plugin</artifactId>

<version>3.0.0-M5</version>

</plugin>

<!-- Other plugins... -->

</plugins>

</build>

</project>

Exception: One potential exception is a DependencyResolutionException if Maven cannot resolve dependencies, for example because of network problems or incorrect repository configurations. To handle this, I would verify network connectivity and the repository URLs, and ensure that the correct dependency versions are specified.

Challenges: One challenge was maintaining consistent versions across dependencies. I addressed this by regularly updating dependencies and using Maven’s version management capabilities to ensure uniformity. Additionally, managing custom configurations for different environments required clear documentation and profiles in the POM.xml file, facilitating seamless configuration switching for diverse testing environments.
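One common way to apply that version management and those environment profiles is to keep dependency versions in <properties> and define one Maven profile per environment. The fragment below is a sketch, not our actual pom.xml: the property names (selenium.version, testng.version, test.env) and the profile ids are assumptions, and the environment value only matters if the tests read it, which Surefire enables here by passing it on as a system property.

```xml
<properties>
    <!-- Versions defined once and reused below -->
    <selenium.version>3.141.59</selenium.version>
    <testng.version>7.4.0</testng.version>
    <!-- Default target environment; the profiles below override it -->
    <test.env>staging</test.env>
</properties>

<dependencies>
    <dependency>
        <groupId>org.seleniumhq.selenium</groupId>
        <artifactId>selenium-java</artifactId>
        <version>${selenium.version}</version>
    </dependency>
    <dependency>
        <groupId>org.testng</groupId>
        <artifactId>testng</artifactId>
        <version>${testng.version}</version>
    </dependency>
</dependencies>

<build>
    <plugins>
        <plugin>
            <groupId>org.apache.maven.plugins</groupId>
            <artifactId>maven-surefire-plugin</artifactId>
            <version>3.0.0-M5</version>
            <configuration>
                <!-- Expose the selected environment to the tests as a system property -->
                <systemPropertyVariables>
                    <test.env>${test.env}</test.env>
                </systemPropertyVariables>
            </configuration>
        </plugin>
    </plugins>
</build>

<profiles>
    <profile>
        <id>dev</id>
        <properties>
            <test.env>development</test.env>
        </properties>
    </profile>
    <profile>
        <id>prod</id>
        <properties>
            <test.env>production</test.env>
        </properties>
    </profile>
</profiles>
```

Running mvn test -Pdev would then start the tests with test.env set to development, which test code can read with System.getProperty("test.env"). Upgrading Selenium becomes a one-line change to selenium.version.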

Q.4. How does Jenkins fit into your automation framework?

Explanation: Jenkins is a crucial part of our automation framework, serving as a continuous integration and continuous deployment (CI/CD) tool. It automates the build, test, and deployment processes, ensuring seamless integration of code changes and providing a centralized platform for managing and executing automated tests.

Use Case: In our web project, Jenkins is configured to trigger automated test runs whenever code is committed to the version control system (e.g., Git). This ensures that tests are automatically executed on each code change, providing rapid feedback to the development team.

Code Snippet: // Jenkinsfile (Declarative Pipeline) example:

pipeline {

agent any

stages {

stage('Checkout') {

steps {

script {

// Checkout code from version control (e.g., Git)

checkout scm

}

}

}

stage('Build') {

steps {

script {

// Compile and package without running tests (they run in the next stage)

sh 'mvn -B clean install -DskipTests'

}

}

}

stage('Run Tests') {

steps {

script {

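// Execute automated tests; failures are recorded in the reports instead of aborting the stage

sh 'mvn -B test -Dmaven.test.failure.ignore=true'

}

}

}

// Additional stages for deployment, reporting, etc.

}

post {

always {

// Publish Surefire results (JUnit plugin): test failures mark the build UNSTABLE

junit 'target/surefire-reports/TEST-*.xml'

}

}

}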

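Exception: One potential issue is a failed build: when tests fail, Maven reports BUILD FAILURE and exits with a non-zero status, which stops the pipeline at the test stage. To handle this, I would have the test step record failures instead of aborting (for example with -Dmaven.test.failure.ignore=true) and publish the test results, so that Jenkins marks the build as unstable or failed based on the outcome and the team can investigate promptly.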

Challenges: One challenge was ensuring consistent test environments between local development machines and Jenkins. I addressed this by containerizing test dependencies using tools like Docker and orchestrating the environment setup within Jenkins, ensuring a standardized testing environment. Additionally, coordinating parallel test execution across Jenkins agents required careful distribution of test suites and managing dependencies, which I achieved by leveraging Jenkins’ parallel execution capabilities and optimizing test suite structures.
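Distributing test suites across Jenkins agents can start with a deterministic split of test classes into N shards, with each parallel branch running one shard. A minimal plain-Java sketch, assuming a round-robin split over the sorted class names; TestSharder and shard are hypothetical names, and how each agent learns its shard number (for example through a build parameter) is left out.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

/** Hypothetical helper that splits test classes into shards for parallel agents. */
final class TestSharder {

    private TestSharder() {
    }

    /**
     * Sorts the class names so every agent computes the same split,
     * then deals them out round-robin. Returns one list per shard.
     */
    static List<List<String>> shard(List<String> testClasses, int shardCount) {
        if (shardCount < 1) {
            throw new IllegalArgumentException("shardCount must be at least 1");
        }
        List<String> sorted = new ArrayList<>(testClasses);
        Collections.sort(sorted);
        List<List<String>> shards = new ArrayList<>();
        for (int i = 0; i < shardCount; i++) {
            shards.add(new ArrayList<>());
        }
        for (int i = 0; i < sorted.size(); i++) {
            shards.get(i % shardCount).add(sorted.get(i));
        }
        return shards;
    }
}
```

Round-robin balances the number of classes per agent, not their run time; if some classes are much slower than others, weighting the split by historical durations is the usual refinement.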

Q.5. Have you implemented BDD in your project, and if so, how?

Explanation: BDD is an integral part of our automation project, implemented with Cucumber. Developers, testers, and business stakeholders collaborate to define test scenarios in a human-readable format. These scenarios are written in Gherkin syntax and then automated through step definitions, promoting a shared understanding of the expected behavior.

Use Case: In our project, we use Cucumber for BDD. We have feature files written in Gherkin syntax that describe user stories or scenarios. These feature files are linked to step definitions written in Java, where the actual automation logic using Selenium and other tools is implemented.

Feature File:

Feature: Login

Scenario: Successful Login

Given the user is on the login page

When the user enters valid credentials

And clicks the login button

Then the user should be redirected to the dashboard

Step Definitions (StepDefinitions.java):

import io.cucumber.java.en.Given;

import io.cucumber.java.en.When;

import io.cucumber.java.en.Then;

public class StepDefinitions {

@Given("the user is on the login page")

public void userIsOnLoginPage() {

// Implementation for navigating to the login page

}

@When("the user enters valid credentials")

public void userEntersValidCredentials() {

// Implementation for entering valid credentials

}

@When("clicks the login button")

public void userClicksLoginButton() {

// Implementation for clicking the login button

}

@Then("the user should be redirected to the dashboard")

public void userRedirectedToDashboard() {

// Implementation for verifying the user is redirected to the dashboard

}

}

Exception: One potential issue is a CucumberException if there are discrepancies between the steps defined in the feature files and the corresponding step definitions. To handle this, I would ensure strict alignment between the feature files and step definitions, regularly reviewing and updating them as needed.
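To see why a mismatch between feature files and step definitions breaks a run, it helps to picture the glue as a lookup from step text to code. The toy registry below illustrates only that idea in plain Java and is not how Cucumber is implemented: real Cucumber matches steps with Cucumber Expressions or regular expressions and reports unmatched steps as undefined. As in Cucumber, the leading Given/When/Then/And keyword is not part of the matched text. StepRegistry and its methods are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;

/** Toy model of step-definition glue: exact step text mapped to an action. */
final class StepRegistry {

    private final Map<String, Runnable> steps = new HashMap<>();

    /** Registers an action for a step phrase; duplicates are rejected (compare ambiguous steps). */
    void define(String phrase, Runnable action) {
        if (steps.putIfAbsent(phrase, action) != null) {
            throw new IllegalStateException("Duplicate step definition: " + phrase);
        }
    }

    /** Runs one Gherkin line, dropping the leading keyword before the lookup. */
    void run(String gherkinLine) {
        String phrase = gherkinLine.trim().replaceFirst("^(Given|When|Then|And|But)\\s+", "");
        Runnable action = steps.get(phrase);
        if (action == null) {
            throw new IllegalStateException("Undefined step: " + phrase);
        }
        action.run();
    }
}
```

A feature line such as "And clicks the login button" finds the definition registered for "clicks the login button", while a reworded line like "When the user clicks login" has no match; this is the kind of drift that regular reviews of feature files and step definitions are meant to catch.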

Challenges: One challenge was maintaining the readability of feature files as they grew in complexity. I addressed this by regularly reviewing and refactoring the feature files, ensuring they remained concise and focused on business-readable language. Additionally, coordinating the collaboration between team members, including developers and non-technical stakeholders, required effective communication and documentation, which I facilitated through regular BDD grooming sessions and clear documentation practices.

Q.6. Explain the concept of reporting techniques in your framework.

Explanation: Reporting techniques in our automation framework refer to the mechanisms used to generate and present test execution results in a clear and actionable format. It involves capturing and visualizing relevant information such as test pass/fail status, execution time, and detailed logs to provide insights into the health and performance of the automated test suite.

Use Case: In our project, we use ExtentReports for comprehensive, interactive HTML reporting. After each test run, a detailed report is generated, showing test results, step-by-step logs, screenshots for failed tests, and overall execution statistics. This improves visibility and helps the team identify and resolve issues quickly.

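Code snippet: // Sample code snippet using ExtentReports (Spark reporter) with TestNG

import com.aventstack.extentreports.ExtentReports;

import com.aventstack.extentreports.ExtentTest;

import com.aventstack.extentreports.Status;

import com.aventstack.extentreports.markuputils.ExtentColor;

import com.aventstack.extentreports.markuputils.MarkupHelper;

import com.aventstack.extentreports.reporter.ExtentSparkReporter;

import java.lang.reflect.Method;

import org.testng.ITestResult;

import org.testng.annotations.AfterMethod;

import org.testng.annotations.AfterSuite;

import org.testng.annotations.BeforeMethod;

import org.testng.annotations.BeforeSuite;

public class TestBase {

// One report for the whole suite run, shared by all test methods

protected static ExtentReports extent;

// One report entry for the current test method

protected ExtentTest test;

@BeforeSuite

public void setUpReport() {

extent = new ExtentReports();

extent.attachReporter(new ExtentSparkReporter("extent-report.html"));

}

@BeforeMethod

public void setUp(Method method) {

// Create the entry before the test runs, so tearDown always has a node to log to

test = extent.createTest(method.getName());

// Additional setup...

}

@AfterMethod

public void tearDown(ITestResult result) {

if (result.getStatus() == ITestResult.FAILURE) {

test.log(Status.FAIL, MarkupHelper.createLabel(result.getName() + " - Test Case Failed", ExtentColor.RED));

test.fail(result.getThrowable());

} else if (result.getStatus() == ITestResult.SKIP) {

test.log(Status.SKIP, MarkupHelper.createLabel(result.getName() + " - Test Case Skipped", ExtentColor.ORANGE));

} else {

test.log(Status.PASS, MarkupHelper.createLabel(result.getName() + " - Test Case Passed", ExtentColor.GREEN));

}

}

@AfterSuite

public void writeReport() {

// Write the collected results to the HTML file once, at the end of the run

extent.flush();

}

}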

Exception: One potential issue is a failure during report generation, such as insufficient permissions to write the report file. To handle this, I would ensure the necessary file permissions are set and add exception handling that logs any reporting errors clearly, so that a reporting problem is not mistaken for a test failure.

Challenges: One challenge was maintaining a balance between detailed reporting and performance impact. I addressed this by optimizing the reporting configurations, such as selectively capturing screenshots for failed tests and limiting the verbosity of logs for successful tests. Additionally, ensuring consistency in reporting across different environments required thorough configuration management, which I achieved through version control of reporting dependencies and regular synchronization with the development and testing environments.
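The overall execution statistics shown in such a report come down to counting results by status. The plain-Java sketch below computes pass, fail, and skip counts and a pass rate from a list of outcomes; the Outcome enum and RunSummary class are hypothetical stand-ins for TestNG’s ITestResult statuses, and leaving skipped tests out of the pass rate is a design choice, not an ExtentReports rule.

```java
import java.util.EnumMap;
import java.util.List;
import java.util.Map;

/** Hypothetical per-run summary, similar to the totals on a report dashboard. */
final class RunSummary {

    enum Outcome { PASS, FAIL, SKIP }

    private final Map<Outcome, Integer> counts = new EnumMap<>(Outcome.class);

    RunSummary(List<Outcome> outcomes) {
        for (Outcome o : Outcome.values()) {
            counts.put(o, 0); // start every status at zero
        }
        for (Outcome o : outcomes) {
            counts.merge(o, 1, Integer::sum);
        }
    }

    int count(Outcome outcome) {
        return counts.get(outcome);
    }

    /** Pass rate over executed tests only; skipped tests are excluded. */
    double passRatePercent() {
        int executed = count(Outcome.PASS) + count(Outcome.FAIL);
        return executed == 0 ? 0.0 : 100.0 * count(Outcome.PASS) / executed;
    }
}
```

For three passes, one failure, and one skip, the pass rate is 75%. Counting the skip as a non-pass would give 60%, so whichever convention is chosen should be stated on the report.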

Q.7. How do you handle dynamic elements that appear after some time?

Explanation: Handling dynamic elements that appear after some time involves implementing effective wait strategies in the automation framework. This ensures synchronization between the test script and the web application, allowing the automation tool to wait for the dynamic element to become present, visible, or interactive before interacting with it.

Use Case: In our project, we often encounter dynamic elements like pop-ups or content that loads asynchronously. To handle this, we use explicit waits with conditions tailored to the specific behavior of the dynamic element. For instance, we may wait for the element to be present in the DOM or become clickable before interacting with it.

Code Snippet:

import org.openqa.selenium.By;

import org.openqa.selenium.WebDriver;

import org.openqa.selenium.WebElement;

import org.openqa.selenium.support.ui.ExpectedConditions;

import org.openqa.selenium.support.ui.WebDriverWait;

public class DynamicElementHandler {

private WebDriver driver;

private WebDriverWait wait;

public DynamicElementHandler(WebDriver driver) {

this.driver = driver;

this.wait = new WebDriverWait(driver, 10); // 10 seconds timeout

}

public void clickDynamicElement(By dynamicElementLocator) {

WebElement element = wait.until(ExpectedConditions.elementToBeClickable(dynamicElementLocator));

element.click();

}

}

Exception: One potential exception is a TimeoutException if the dynamic element does not appear within the specified timeout duration. To handle this, I would adjust the timeout duration based on the expected behavior of the dynamic element and thoroughly analyze the root cause of delays, which might involve optimizing the application or adjusting the test script accordingly.
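An explicit wait is essentially a polling loop: it evaluates a condition repeatedly until the condition returns a usable value or the timeout expires. The plain-Java sketch below shows that mechanism in the spirit of Selenium’s WebDriverWait; PollingWait, until, and WaitTimeoutException are hypothetical names. Like Selenium’s TimeoutException, the timeout exception here is unchecked. Unlike FluentWait, exceptions thrown by the condition are propagated rather than optionally ignored.

```java
import java.time.Duration;
import java.util.function.Supplier;

/** Hypothetical minimal polling wait, illustrating how explicit waits work. */
final class PollingWait {

    /** Unchecked, like Selenium's TimeoutException. */
    static final class WaitTimeoutException extends RuntimeException {
        WaitTimeoutException(String message) {
            super(message);
        }
    }

    private PollingWait() {
    }

    static <T> T until(Supplier<T> condition, Duration timeout, Duration pollInterval) {
        long deadline = System.nanoTime() + timeout.toNanos();
        while (true) {
            T value = condition.get();
            // As with WebDriverWait, null or Boolean.FALSE means "not yet"
            if (value != null && !Boolean.FALSE.equals(value)) {
                return value;
            }
            if (System.nanoTime() - deadline >= 0) {
                throw new WaitTimeoutException("Condition not met within " + timeout);
            }
            try {
                Thread.sleep(pollInterval.toMillis());
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // preserve the interrupt flag
                throw new IllegalStateException("Interrupted while waiting", e);
            }
        }
    }
}
```

The timeout bounds the total wait and the poll interval bounds how late the element is noticed, so raising the timeout for a slow element costs nothing when the element appears early.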

Challenges: One challenge was dealing with unpredictable loading times for dynamic elements. I addressed this by relying on explicit waits with conditions and timeouts tuned to each element rather than a single global delay; Selenium’s documentation warns against mixing implicit and explicit waits, because the combination can produce unpredictable wait times. Additionally, coordinating waits across different browsers and environments required regular testing and adjustments, which I achieved through continuous collaboration with the testing team and by maintaining a well-documented set of wait strategies tailored to specific scenarios.

Q.8. Explain the difference between Cucumber and TestNG.

Explanation: Cucumber and TestNG are both used for automated testing, but they serve different purposes. TestNG is a general-purpose test framework (covering unit, functional, and integration tests) that controls how tests are organized and executed, whereas Cucumber is a Behavior-Driven Development (BDD) tool that supports collaboration between technical and non-technical stakeholders through plain-text feature files.

Use Case: In our project, we use TestNG for traditional unit and functional testing. TestNG allows us to organize and execute test cases, manage dependencies, and parallelize test execution. On the other hand, Cucumber is employed for BDD scenarios. It uses Gherkin syntax to write human-readable feature files that are associated with step definitions written in Java, enabling collaboration with non-technical stakeholders for defining and automating high-level scenarios.

Code Snippet:

// Sample TestNG test class

import org.testng.annotations.Test;

public class TestNGExample {

@Test public void testScenario1() {

// TestNG test logic

}

@Test public void testScenario2() {

// Another TestNG test logic

}

}

Feature File:

Feature: Login

Scenario: Successful Login

Given the user is on the login page

When the user enters valid credentials

And clicks the login button

Then the user should be redirected to the dashboard

This snippet demonstrates a TestNG test class with traditional test methods and a Cucumber feature file defining a BDD scenario.

Exception: One potential issue is a CucumberException if there are discrepancies between the step definitions and feature files. To handle this, I would ensure strict alignment between the Gherkin syntax in the feature files and the corresponding step definitions in Java. Additionally, coordinating parallel execution between TestNG and Cucumber might pose challenges, which I address by managing test suite configurations carefully and optimizing test structures to avoid conflicts.

Challenges: One challenge was maintaining a clear separation between unit tests implemented in TestNG and BDD scenarios in Cucumber. I addressed this by organizing the project structure effectively and keeping unit tests and feature files in distinct packages. Additionally, ensuring consistent reporting across both frameworks required custom reporting configurations, which I achieved by leveraging reporting plugins specific to TestNG and Cucumber and aggregating the results for a comprehensive view.

Q.9. What are the advantages of using the Page Factory pattern in Selenium?

Explanation: The Page Factory pattern in Selenium is a design pattern that enhances the maintainability and readability of test code by providing a mechanism to initialize and interact with web elements in a page object. It leverages annotations and lazy initialization, offering several advantages for efficient and scalable test automation.

Use Case: In our project, we use the Page Factory pattern to create page objects for different web pages. For instance, we have a LoginPage class that represents the login page, with annotated WebElement variables for username, password, and login button. The Page Factory initializes these elements, providing a clean and modular way to interact with the login page in our test scripts.

Code Snippet:

import org.openqa.selenium.WebDriver;

import org.openqa.selenium.WebElement;

import org.openqa.selenium.support.FindBy;

import org.openqa.selenium.support.PageFactory;

public class LoginPage {

@FindBy(id = "username")

private WebElement usernameInput;

@FindBy(id = "password")

private WebElement passwordInput;

@FindBy(id = "loginButton")

private WebElement loginButton;

public LoginPage(WebDriver driver) {

PageFactory.initElements(driver, this);

}

public void login(String username, String password) {

usernameInput.sendKeys(username);

passwordInput.sendKeys(password);

loginButton.click();

}

}

This snippet demonstrates a simplified LoginPage class using the Page Factory pattern, with annotated WebElement variables and initialization in the constructor.
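Part of Page Factory’s appeal is lazy initialization: PageFactory.initElements does not look anything up immediately. Instead, it assigns each annotated field a proxy that locates the element when a method is called on it (and, unless @CacheLookup is used, locates it again on every call). The plain-Java sketch below reproduces that idea with java.lang.reflect.Proxy; the Element interface, the LazyElements class, and the Supplier-based locator are hypothetical stand-ins for WebElement and the driver lookup.

```java
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Proxy;
import java.util.function.Supplier;

/** Hypothetical stand-in for WebElement. */
interface Element {
    void click();
}

/** Creates lazy proxies, illustrating the mechanism behind PageFactory. */
final class LazyElements {

    private LazyElements() {
    }

    /** Returns an Element that runs the locator on every call (no caching). */
    static Element lazy(Supplier<Element> locator) {
        return (Element) Proxy.newProxyInstance(
                Element.class.getClassLoader(),
                new Class<?>[] {Element.class},
                (proxy, method, args) -> {
                    Element real = locator.get(); // lookup happens here, not at creation
                    try {
                        return method.invoke(real, args);
                    } catch (InvocationTargetException e) {
                        throw e.getCause(); // rethrow the element's own exception
                    }
                });
    }
}
```

Because the lookup happens on each call, a page object can be constructed before the page has loaded, and a re-rendered element is found afresh the next time it is used; the cost is one lookup per interaction.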

Exception: One potential issue is a NoSuchElementException if the annotated elements cannot be found in the HTML. To handle this, I would ensure proper synchronization and wait strategies are in place, and I would regularly review and update the page objects based on changes in the application.

Challenges: One challenge was maintaining page objects for dynamic web pages. I addressed this by using dynamic FindBy annotations, such as @FindBy(xpath = "//input[contains(@id, 'dynamicId')]"), to handle variations in element attributes. Additionally, coordinating the initialization of page objects across different browsers required careful consideration of browser-specific behaviors, which I achieved through thorough testing and browser-specific configurations within the Page Factory initialization process.

Q.10. Your application needs to support multiple browsers. How would you approach cross-browser testing in your automation framework?

Explanation: Cross-browser testing in our automation framework involves validating the application’s functionality and compatibility across different web browsers. We achieve this by configuring the framework to execute tests on various browsers, handling browser-specific behaviors, and optimizing test scripts to ensure consistent and reliable results.

Use Case: In our project, we use TestNG’s parallel execution capabilities to run the same test suite concurrently on multiple browsers, such as Chrome, Firefox, and Safari. We maintain browser-specific configurations and use conditional logic in our test scripts to handle variations in browser behavior, ensuring a seamless cross-browser testing experience.

Code Snippet:

import org.openqa.selenium.WebDriver;

import org.openqa.selenium.chrome.ChromeDriver;

import org.openqa.selenium.firefox.FirefoxDriver;

import org.openqa.selenium.safari.SafariDriver;

import org.testng.annotations.AfterMethod;

import org.testng.annotations.BeforeMethod;

import org.testng.annotations.Parameters;

public class TestBase {

private WebDriver driver;

@Parameters("browser")

@BeforeMethod

public void setUp(String browser) {

switch (browser.toLowerCase()) {

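case "chrome":

System.setProperty("webdriver.chrome.driver", "path/to/chromedriver");

driver = new ChromeDriver();

break;

case "firefox":

System.setProperty("webdriver.gecko.driver", "path/to/geckodriver");

driver = new FirefoxDriver();

break;

case "safari":

// safaridriver is bundled with macOS, so no binary path is set

driver = new SafariDriver();

break;

// Additional cases for other browsers...

default:

// Fail fast instead of leaving driver null for the test to trip over

throw new IllegalArgumentException("Unsupported browser: " + browser);

}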

}

@AfterMethod

public void tearDown() {

if (driver != null) {

driver.quit();

}

}

}

This snippet demonstrates a simplified TestBase class with browser configuration based on TestNG parameters for cross-browser testing.
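The browser value that setUp receives comes from testng.xml. A sketch of a suite that runs the same tests on three browsers in parallel, with one <test> block per browser; the suite, test, and class names (com.example.tests.LoginTest is assumed to extend TestBase) are placeholders.

```xml
<!DOCTYPE suite SYSTEM "https://testng.org/testng-1.0.dtd">
<!-- parallel="tests": each <test> block below runs in its own thread -->
<suite name="CrossBrowserSuite" parallel="tests" thread-count="3">
    <test name="ChromeTests">
        <parameter name="browser" value="chrome"/>
        <classes>
            <class name="com.example.tests.LoginTest"/>
        </classes>
    </test>
    <test name="FirefoxTests">
        <parameter name="browser" value="firefox"/>
        <classes>
            <class name="com.example.tests.LoginTest"/>
        </classes>
    </test>
    <test name="SafariTests">
        <parameter name="browser" value="safari"/>
        <classes>
            <class name="com.example.tests.LoginTest"/>
        </classes>
    </test>
</suite>
```

The Safari block only runs on a macOS agent with remote automation enabled in Safari, so on Linux CI agents it is typically split into its own suite.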

Exception: One potential issue is a WebDriverException if there are problems initializing the WebDriver instance for a specific browser. To handle this, I would ensure that the WebDriver binaries (e.g., chromedriver, geckodriver) are correctly configured and accessible, and I would implement proper exception handling to provide meaningful error messages.

Challenges: One challenge was dealing with browser-specific behaviors impacting test stability. I addressed this by maintaining a comprehensive set of browser-specific configurations and utilizing conditional statements in the test scripts to handle variations. Additionally, ensuring synchronization across different browser versions required regular testing and updates, which I achieved through continuous monitoring of browser releases and compatibility testing during the development lifecycle.
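If tests run with parallel="methods" instead, all methods of a class share one instance, so a single driver field in TestBase would be shared by concurrent tests. A common remedy is to keep one driver per thread in a ThreadLocal. The sketch below shows the pattern generically in plain Java; PerThread is a hypothetical name, and in the framework the type parameter would be WebDriver, with the factory creating the browser-specific driver.

```java
import java.util.function.Supplier;

/** Hypothetical holder that gives each thread its own instance (e.g. one WebDriver per thread). */
final class PerThread<T> {

    private final ThreadLocal<T> current;

    PerThread(Supplier<? extends T> factory) {
        // withInitial creates the instance lazily, the first time a thread calls get()
        this.current = ThreadLocal.withInitial(factory);
    }

    T get() {
        return current.get();
    }

    /** Call from teardown (after quitting the driver) so a pooled thread does not reuse a stale instance. */
    void clear() {
        current.remove();
    }
}
```

Test code then calls holder.get() wherever it previously used the driver field, and each TestNG worker thread transparently gets its own browser session.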

Q.11. Explain a situation where you would need to perform headless browser testing. How would you implement it in your automation framework?

Explanation: Headless browser testing is valuable in scenarios where a visible browser window is not required, such as in server environments or when running tests on machines without a graphical user interface. It allows for faster test execution and efficient resource utilization. In our automation framework, we implement headless browser testing to enhance performance and streamline test runs in such scenarios.

Use Case: In our project, we have a nightly regression test suite that runs on a headless server environment to validate the application’s stability. By using headless browsers like Chrome or Firefox, we can execute tests without the need for a graphical interface, optimizing resource consumption and allowing for faster and more efficient continuous integration.

Code Snippet:

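import org.openqa.selenium.WebDriver;

import org.openqa.selenium.chrome.ChromeDriver;

import org.openqa.selenium.chrome.ChromeOptions;

import org.openqa.selenium.firefox.FirefoxDriver;

import org.openqa.selenium.firefox.FirefoxOptions;

import org.testng.annotations.AfterMethod;

import org.testng.annotations.BeforeMethod;

import org.testng.annotations.Parameters;

public class TestBase {

private WebDriver driver;

@Parameters("browser")

@BeforeMethod

public void setUp(String browser) {

// Headless by default; pass -Dheadless=false to watch the browser locally

boolean headless = Boolean.parseBoolean(System.getProperty("headless", "true"));

switch (browser.toLowerCase()) {

case "chrome":

System.setProperty("webdriver.chrome.driver", "path/to/chromedriver");

ChromeOptions chromeOptions = new ChromeOptions();

if (headless) {

// "--headless=new" needs Chrome 109+; a fixed window size keeps layouts consistent

chromeOptions.addArguments("--headless=new", "--window-size=1920,1080");

}

driver = new ChromeDriver(chromeOptions);

break;

case "firefox":

System.setProperty("webdriver.gecko.driver", "path/to/geckodriver");

FirefoxOptions firefoxOptions = new FirefoxOptions();

if (headless) {

firefoxOptions.addArguments("-headless");

}

driver = new FirefoxDriver(firefoxOptions);

break;

// Safari has no headless mode, so it is not offered here

default:

throw new IllegalArgumentException("Unsupported browser for headless runs: " + browser);

}

}

@AfterMethod

public void tearDown() {

if (driver != null) {

driver.quit();

}

}

}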

Exception: One potential issue is a SessionNotCreatedException if the specified browser options or arguments are not supported. To handle this, I would ensure that the browser options are compatible with the selected WebDriver version and review the official documentation for any changes or updates.

Challenges: One challenge was debugging and troubleshooting issues in headless mode. I addressed this by incorporating additional logging mechanisms in the test scripts and configuring the test environment to capture screenshots or videos of headless test runs for in-depth analysis. Additionally, ensuring consistency in test results between headless and non-headless modes required meticulous validation of assertions and careful handling of timing issues, which I achieved through thorough test script reviews and adjustments.
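On a CI server, the choice between headless and headed runs can be automated rather than hard-coded. A minimal sketch under these assumptions: an explicit -Dheadless=true|false system property wins, and otherwise headless mode is used when the JVM reports that no display is available (java.awt.GraphicsEnvironment.isHeadless()). HeadlessPolicy and the headless property name are hypothetical; the pure decide method is kept separate so it can be tested without a real display.

```java
import java.awt.GraphicsEnvironment;

/** Hypothetical policy for choosing headless mode. */
final class HeadlessPolicy {

    private HeadlessPolicy() {
    }

    /** Decides using the real system property and the JVM's display detection. */
    static boolean shouldRunHeadless() {
        return decide(System.getProperty("headless"), GraphicsEnvironment.isHeadless());
    }

    /**
     * @param override  value of the "headless" property, or null if not set
     * @param noDisplay true when no display is available (typical on CI agents)
     */
    static boolean decide(String override, boolean noDisplay) {
        if (override != null && !override.trim().isEmpty()) {
            return Boolean.parseBoolean(override.trim()); // explicit choice wins
        }
        return noDisplay;
    }
}
```

This keeps a developer’s local run visible by default while the nightly suite on a display-less server switches to headless on its own, and either behavior can still be forced from the command line.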

Q.12. Your application has multiple environments (e.g., development, staging, production). How do you configure your automation framework to run tests in different environments?

Explanation: Configuring the automation framework for different environments involves adapting the test scripts to interact with distinct application instances, URLs, databases, or other environment-specific settings. In our framework, we use configuration files, environment variables, or TestNG parameters to handle environment-specific settings dynamically and ensure seamless execution across development, staging, and production environments.

Use Case: In our web project, we use TestNG parameters to specify the target environment for test execution. For instance, we can run a suite of tests against the development environment by providing the corresponding environment parameter, and the framework dynamically adjusts the base URL, database connections, or other settings accordingly.

Code Snippet:

import org.openqa.selenium.WebDriver;

import org.openqa.selenium.chrome.ChromeDriver;

import org.openqa.selenium.firefox.FirefoxDriver;

import org.testng.annotations.AfterMethod;

import org.testng.annotations.BeforeMethod;

import org.testng.annotations.Parameters;

public class TestBase {

private WebDriver driver;

@Parameters({"browser", "environment"})

@BeforeMethod

public void setUp(String browser, String environment) {

String baseUrl;

switch (environment.toLowerCase()) {

case "development":

baseUrl = "http://dev.example.com";

break;

case "staging":

baseUrl = "http://staging.example.com";

break;

case "production":

baseUrl = "http://www.example.com";

break;

default:

throw new IllegalArgumentException("Invalid environment specified: " + environment);

}

switch (browser.toLowerCase()) {

case "chrome":

System.setProperty("webdriver.chrome.driver", "path/to/chromedriver");

driver = new ChromeDriver();

break;

case "firefox":

System.setProperty("webdriver.gecko.driver", "path/to/geckodriver");

driver = new FirefoxDriver();

break;

// Additional cases for other browsers...

default:

// Fail fast here rather than with a NullPointerException at driver.get()

throw new IllegalArgumentException("Unsupported browser: " + browser);

}

// Set the base URL for the test

driver.get(baseUrl);

}

@AfterMethod

public void tearDown() {

if (driver != null) {

driver.quit();

}

}

}

This snippet demonstrates a simplified TestBase class with dynamic environment configuration based on TestNG parameters for flexible test execution across different environments.
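The same lookup can also be driven by a configuration file, so that adding an environment does not require a code change. A minimal sketch, assuming a properties file with one <environment>.baseUrl key per environment and a -Dtest.env system property that selects the environment; EnvironmentConfig, the key format, and the property name test.env are hypothetical.

```java
import java.io.IOException;
import java.io.Reader;
import java.io.UncheckedIOException;
import java.util.Locale;
import java.util.Properties;

/** Hypothetical environment lookup backed by a properties file. */
final class EnvironmentConfig {

    private final Properties props = new Properties();

    /** Loads key=value pairs, e.g. from a reader over config.properties. */
    EnvironmentConfig(Reader source) {
        try {
            props.load(source);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Environment chosen with -Dtest.env=..., falling back to the given default. */
    static String selectedEnvironment(String defaultEnv) {
        return System.getProperty("test.env", defaultEnv);
    }

    /** Returns the value of "<env>.baseUrl", failing fast for unknown environments. */
    String baseUrl(String environment) {
        String key = environment.toLowerCase(Locale.ROOT) + ".baseUrl";
        String url = props.getProperty(key);
        if (url == null) {
            throw new IllegalArgumentException("Invalid environment specified: " + environment);
        }
        return url;
    }
}
```

In TestBase, setUp could then call config.baseUrl(environment) instead of the hard-coded switch, and a new environment becomes one extra line in the properties file.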

Exception: One potential issue is an IllegalArgumentException if an invalid environment is specified. To handle this, I would implement proper validation of the provided environment parameter and provide informative error messages for quick resolution.

Challenges: One challenge was maintaining consistency in environment configurations across different teams and development branches. I addressed this by centralizing environment configuration files, promoting standardization, and documenting best practices for configuration management. Additionally, handling environment-specific data, such as user credentials, required secure and flexible storage mechanisms, which I achieved through encrypted configuration files and integration with secure credential management tools.

Q.13. Your automation framework needs to handle an increasing number of test cases. How would you design your framework to ensure scalability and maintainability?

Explanation: To ensure scalability and maintainability in handling an increasing number of test cases, the automation framework needs to be designed with modular, flexible, and efficient structures. It involves breaking down the framework into distinct components and implementing practices that allow easy scalability and maintenance.

Use Case: User authentication is a common scenario in our web project. For it, I would create a modular Page Object that handles the login functionality. This module can be reused across multiple test cases, ensuring a consistent and efficient approach to authentication throughout the project.

Code Snippet:

import org.openqa.selenium.By;

import org.openqa.selenium.WebDriver;

public class LoginPage {

private WebDriver driver;

private By usernameField = By.id("username");

private By passwordField = By.id("password");

private By loginButton = By.id("loginBtn");

public LoginPage(WebDriver driver) {

this.driver = driver;

}

public void login(String username, String password) {

driver.findElement(usernameField).sendKeys(username);

driver.findElement(passwordField).sendKeys(password);

driver.findElement(loginButton).click();

}

}

Exception:

A potential exception that might occur is a NoSuchElementException if the specified web elements (usernameField, passwordField, loginButton) cannot be located on the page. Handling this exception involves proper synchronization techniques or using explicit waits to ensure the elements are present before interacting with them.

Challenges:

One challenge was maintaining a clear separation of concerns as the number of test cases increased. To address this, I implemented the Page Object Model (POM) to encapsulate page-specific functionality, ensuring a modular and organized structure. Another challenge was handling dynamic elements or changes in the application UI. I addressed this by implementing dynamic element locators and regular reviews of the Page Objects to adapt to UI changes promptly.
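Dynamic element locators are often built from a template at run time, for example an XPath that finds a button by its visible label. One pitfall is quoting: XPath 1.0 string literals have no escape character, so a label containing an apostrophe breaks a naive '...' template. A minimal plain-Java sketch of a safe literal builder; XPathText, literal, and buttonByLabel are hypothetical names.

```java
/** Hypothetical helper for building XPath 1.0 string literals from arbitrary text. */
final class XPathText {

    private XPathText() {
    }

    /**
     * Wraps text in quotes that XPath 1.0 can parse. Text containing both
     * quote kinds is split on apostrophes and rejoined with concat().
     */
    static String literal(String text) {
        if (!text.contains("'")) {
            return "'" + text + "'";
        }
        if (!text.contains("\"")) {
            return "\"" + text + "\"";
        }
        String[] parts = text.split("'", -1); // -1 keeps leading/trailing empty parts
        StringBuilder sb = new StringBuilder("concat(");
        for (int i = 0; i < parts.length; i++) {
            if (i > 0) {
                sb.append(", \"'\", "); // the apostrophe itself, in double quotes
            }
            sb.append('\'').append(parts[i]).append('\'');
        }
        return sb.append(')').toString();
    }

    /** Example template: a button located by its visible label. */
    static String buttonByLabel(String label) {
        return "//button[normalize-space()=" + literal(label) + "]";
    }
}
```

A page object method can then call By.xpath(XPathText.buttonByLabel(label)) for any label, including ones like "Don't save", without hand-written quoting.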

Q.14. How do you collaborate with developers and other team members in an agile environment?

Explanation: Collaborating with developers and team members in an agile environment involves fostering open communication, transparency, and shared responsibility to deliver high-quality software. It includes active participation in agile ceremonies, continuous feedback, and adapting to changes efficiently.

Use Case:

For example, when a new feature is being developed in our project, I participate in sprint planning sessions to understand the user stories, provide input on testability, and identify potential test scenarios. During daily stand-ups, I communicate progress, discuss any impediments, and collaborate with developers on bug fixes or improvements.

Challenges:

One challenge was maintaining synchronized workflows with developers, especially when requirements changed rapidly. To address this, I advocated for regular sync-ups, embraced adaptive planning, and actively participated in refinement sessions to ensure shared understanding. Another challenge was handling divergent opinions on the definition of done. To overcome this, I facilitated discussions to align on a common understanding of acceptance criteria, emphasizing the importance of delivering not just functional but high-quality features.

Q.15. Describe a situation where you had to prioritize tasks in a high-pressure environment. How did you manage your time and deliver quality results?

Explanation: Prioritizing tasks in a high-pressure environment involves assessing the urgency and importance of each task to allocate time and resources effectively. It requires quick decision-making, focusing on critical activities, and delivering quality results under tight deadlines.

Use Case: In a critical release cycle, there was a sudden influx of high-priority defects reported by users. To address this, I quickly conducted a risk assessment to identify the defects impacting the core functionality and affecting the largest user base. Prioritizing these critical defects over less impactful issues ensured that the most pressing problems were addressed first.

Code Snippet: While task prioritization is more about strategic decision-making, a code snippet related to time management or logging for tracking priorities might be relevant:

public class TaskManager {

public static void main(String[] args) {

// Simulating task prioritization

prioritizeTasks("Critical bug fix: Login issue");

prioritizeTasks("Feature development: New user registration");

prioritizeTasks("Enhancement: UI improvements");

// Simulating logging for tracking priorities

logPriority("Critical bug fix: Login issue", Priority.HIGH);

logPriority("Feature development: New user registration", Priority.MEDIUM);

logPriority("Enhancement: UI improvements", Priority.LOW);

}

private static void prioritizeTasks(String task) {

// Simulate task prioritization logic

System.out.println("Prioritizing task: " + task);

}

private static void logPriority(String task, Priority priority) {

// Simulate logging task priorities

System.out.println("Task: " + task + ", Priority: " + priority);

}

enum Priority {

HIGH, MEDIUM, LOW

}

}

Challenges: One challenge was dealing with conflicting priorities and expectations from different stakeholders. To address this, I initiated clear communication channels, engaging with stakeholders to align priorities based on business impact and urgency. Another challenge was managing the psychological pressure associated with high-stakes deadlines. To overcome this, I adopted stress-management techniques, including breaking down tasks into smaller, manageable steps, and leveraging support from the team to distribute the workload effectively.

Author’s Bio:

As CEO of TestLeaf, I’m dedicated to transforming software testing by empowering individuals with real-world skills and advanced technology. With 24+ years in software engineering, I lead our mission to shape local talent into global software professionals. Join us in redefining the future of test engineering and making a lasting impact in the tech world.

Babu Manickam

CEO – Testleaf